*** UNDER CONSTRUCTION ***

With the release of the Pi Video Camera it became practical to configure the Pi as a basic 'motion recording' CCTV camera. Then the release of the B3 provided (just) sufficient processing power to allow (at least zone) 'motion detection' on the Pi itself. In mid 2017 the PiHut launched the 'ZeroCam', a (slightly) price reduced (£15) 5 Mpixel camera 'built around' the Pi Zero interface cable (and identical to the "Pi Zero Mini Sized Camera Module" unit available from UK seller 'mr.craig-lewis' via eBay, for £6 + 96p postage), which allowed a more compact Pi Zero + camera assembly.

Commercial CCTV - from (useless) CIF to (poor quality) 960H

The original, now 'classical', CCTV quality 'CIF' camera resolution is next to useless. These cameras delivered '1/4 NTSC TV' resolution (i.e. 360x240 pixels), sufficient only to show that 'someone' was passing the camera, with essentially zero chance of identification. However, 4 CIF camera 'feeds' could be combined into a single (NTSC) video frame and so recorded directly on an analogue NTSC video tape recorder.

The CCTV industry has 'moved on' from the original CIF (quarter NTSC TV resolution) 360x240 systems via the D1 (720x480 = full NTSC) standard to a new standard they call '960H' (960x480). This 'standard' is actually 'quarter HD' (i.e. the outputs of 4 cameras, each 960x480 pixels, combine into a 1920x960 image that fits within a single 1920x1080 HD frame).

The CCTV vendors who charged mugs a premium price for a NTSC VHS tape recorder (plus 4 useless CIF cameras) are now charging an arm and a leg for what is simply a 4 channel analogue to single channel HD digital converter 'front end' together with a standard HD TV recorder ... along with 4 (almost useless) "960H" (quarter HD resolution) cameras.

So rather than muck about with quarter resolution analogue data (and pay through the nose for some hopelessly buggy proprietary 'CCTV system' software), you are 4x better off with a simple digital HD camera sending h.264 to your computer's hard drive (which can be whatever size you need) using the Open Source iSpy software. Even 'half HD' (1024x720 = 0.74 Mpixels) would be 60% better than the CCTV '960H' (960x480 = 0.46 Mp) standard - and full HD (1920x1080 = 2.1 Mp) gets you 4.5 times higher quality than a 960H CCTV system. However, if you use the 5 Mpixel Pi camera** to take a series of full frame MJPEG images, at 2592x1944 pixels this is more than ten times the quality of commercial 960H CCTV (and makes it at least 10x more likely that an intruder will be identified from your images).

** The 'Mk2' Pi camera module, at 8 Mpixels, delivers even higher resolution photos (3280x2464 pixels).
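The ratios above are plain pixel-count arithmetic (pixel count being the yardstick for 'quality' used here). A minimal bash sketch that reproduces them; the function names are illustrative only, and awk does the division because bash arithmetic is integer-only.

```bash
# Pixel counts behind the comparisons above, relative to 960H (960x480).
# bash only does integer arithmetic, so awk formats the ratio.

pixels() {          # usage: pixels WIDTH HEIGHT
    echo $(( $1 * $2 ))
}

ratio_to_960h() {   # usage: ratio_to_960h WIDTH HEIGHT
    awk -v p="$(pixels "$1" "$2")" -v ref="$(pixels 960 480)" \
        'BEGIN { printf "%.2f\n", p / ref }'
}

for res in 1024x720 1920x1080 2592x1944 3280x2464; do
    w=${res%x*}; h=${res#*x}          # split "WxH" into width and height
    echo "$res = $(pixels "$w" "$h") pixels = $(ratio_to_960h "$w" "$h") x 960H"
done
```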

Home or 'amateur' CCTV is streets ahead of almost all 'commercial' systems (even those costing 10x more). Partly this is because we can use our entire bandwidth for one or 2 cameras (whilst a typical CCTV system will have at least 4 cameras), but more than anything else it's down to the availability of low cost cameras such as the Pi A+ (plus Pi camera). Now that the Pi Zero v2 is camera capable, the cost of a DIY full HD Ethernet connected camera is less than £30.

Why we need a 'smart' CCTV camera

Normal commercial CCTV records hours of 'nothing happening' along with hours of normal activity by hundreds of customers. The quality of the recording is usually so poor that even when they capture 'someone acting suspiciously' it's virtually impossible to see what they are doing, let alone actually identify who they are.

The home user doesn't have to 'prove' that 'nothing happened'. So rather than record hours of poor quality garbage, what we want is some high quality images of actual visitor activity - and since the average home doesn't have hundreds of visitors each day, this won't occupy much storage space.

The most important part of a home CCTV system is the quality of the images (if the thief can't be identified, you will never get your 'stuff' back). This means poor quality 30fps 'video' is 'out' and high-quality MJPEG (i.e. a stream of jpeg images) is 'in' even if it's only able to take photos 3 to 5 times a second. What's more, to avoid wasting bandwidth and storage on 'nothing happening' we need a 'smart' camera system - one that generates high quality images but only stores (or transmits) the 'useful' ones. So whilst the camera will be 'running all the time', images will be saved to a 'temp' store where they are processed using some sort of 'motion detection' (or 'change detection'). Only the 'useful' images need be transmitted to our CCTV storage 'server'.

One thing a home system has to cope with is the possibility that an intruder might cover or disable the camera. This means the system needs to save the images taken just before 'intruder detection' (because there might not be any images 'afterwards' :-) )

In fact it's not a bad idea to fit one or more 'dummy' cameras for the intruder to 'attack', whilst at the same time 'hiding' the 'real' camera nearby (so you get some nice full-face mug shots of the morons as they try to disable your dummy camera)

Live view

Often you will want to view the camera output in 'real time' ("who's at the door" mode). Whilst it's possible to have the Pi send an h.264 video data stream via Ethernet to your 'server' (and use VLC to 'view' the stream), this will significantly reduce the Ethernet bandwidth available for saving the 'full resolution' images.

Chances are we will need the full Ethernet bandwidth just to save the 'wanted' high quality images (5fps of full frame JPEGs is about 96 Mbps), leaving nothing for Live View. So another path has to be found - and fortunately, the Pi provides just that .. its analogue TV (RCA socket) output!

Just like a 'normal' CCTV camera, you can run a 30m co-ax cable from the Pi RCA output to the 'AV input' of the TV sitting in your lounge (or of your PC monitor - many have an 'AV in' RCA socket on the back). You can get the Pi to use the RCA socket to display the raw image 'ring buffer' (ram-disk) in a 'live view' (or 'slide show') mode. Although all video output from the Pi is generated by the GPU (so can be 'any' resolution you like), when using the RCA output you can only choose from a number of 'presets' = in effect either PAL or NTSC 'TV'. However, even NTSC is 4x better than the normal CCTV standard 'CIF' (1/4 NTSC) - and if you are going to 'record' this feed (using the 'AV' input to a 'normal' PAL TV system) there is no point running the RCA analogue output at anything above PAL resolution anyway.

If you are just using the TV feed for 'live view' (and intend to 'save' the camera images as digital files), there is nothing stopping you from running the camera at max. resolution (in MJPEG mode) and just 'viewing' the received images via the TV screen 'at the same time' (in effect, running the Pi in 'slideshow' mode).

CCTV image storage

Professional systems typically keep up to 30 days of CCTV 'footage'. Our home system needs to keep at least as much - more, in fact, if you (or your neighbours) take extended holidays.

The Pi camera is capable of 30fps full HD (1920x1080 with GPU h.264 encoding), or 15fps in still image mode (although it can only manage 5fps at 2592x1944 full frame)

A typical full frame JPEG image is about 2.4MB, which means you can expect to get about 425 photos per GB. At 5 frames/second, that's less than 90s per GB, so even if we could record** at that speed, a camera with a 32GB SDHC card would run out of space in 45 minutes.

** A Pi B+ with a Class 10 SDHC card can manage about 3fps full frame to the SDHC (and about 4fps to a ram-disk). Early Pi systems managed only about 30Mbps across their Ethernet (about 2fps), although it's reported that the Jessie system is (apparently) capable of almost 3x that.
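All of the figures above (and the 96 Mbps quoted under Live view) follow from the ~2.4MB frame size. A small bash sketch, assuming 1 GB = 1024 MB (which is where 'about 425 photos per GB' comes from); the helper names are made up for illustration.

```bash
# Storage and bandwidth arithmetic for full-frame JPEGs.
# Assumptions from the text: ~2.4 MB per 2592x1944 JPEG; 1 GB taken as 1024 MB.

photos_per_gb() {     # usage: photos_per_gb MB_PER_PHOTO  (whole photos only)
    awk -v mb="$1" 'BEGIN { printf "%d\n", 1024 / mb }'
}

minutes_to_fill() {   # usage: minutes_to_fill CARD_GB MB_PER_PHOTO FPS
    awk -v gb="$1" -v mb="$2" -v fps="$3" \
        'BEGIN { printf "%d\n", gb * 1024 / mb / fps / 60 }'
}

mbit_per_s() {        # usage: mbit_per_s MB_PER_PHOTO FPS  (8 bits per byte)
    awk -v mb="$1" -v fps="$2" 'BEGIN { printf "%.0f\n", mb * fps * 8 }'
}

echo "$(photos_per_gb 2.4) photos/GB, 32GB full in $(minutes_to_fill 32 2.4 5) min," \
     "$(mbit_per_s 2.4 5) Mbit/s at 5 fps"
```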

To reduce the storage requirements, we can drop the resolution or fps, however the best way is to eliminate 'duplicate' images (i.e. drop photos that are 'same as the last').

As we will see later, we can run the camera to ram-disk and then 'save the interesting images' (only) to SDHC and finally off-load from the SD card to the LAN.

A Pi camera based CCTV system

Building a practical system is more about hardware than software

1) Powering the Pi + camera

To minimise the wiring we use PoE = Power-Over-Ethernet (yes, for a truly stand-alone unit we would like to use battery power + WiFi, however the likely level of continuous power consumption** will probably rule this out)

** Since we ideally want some sort of 'motion detection', and the higher the resolution (and frame rate) the better, the Pi CPU is going to be running at something like 90%+ load (assuming we want motion detection at better than N second speeds - see later). Additionally, if each Pi camera uses 90% of its 100Mbps Ethernet link, a Gigabit Ethernet router allows at least 10 cameras, each on its own dedicated 100Mbps cable link (whereas WiFi is likely limited to 150Mbps at best, and that has to be 'shared' by everything at the same time = so, if you have 10 devices, they will get 15Mbps each). So it makes sense to run PoE down a dedicated Ethernet cable (rather than run mains to a WiFi equipped Pi).

Power over Ethernet (PoE)

How to power a Pi via an Ethernet cable (PoE)

PoE (in 'Mode B') makes use of the 2 'spare' pairs of wires in a 10/100 standard Ethernet cable. These 2 pairs (pins 4,5 = DC V+, and pins 7,8 = 0v) are 'not used' for 10/100 data transmission, however they ARE used for Gigabit data transmission. The Pi, however, only supports 10/100 (directly or via a USB-Ethernet 'dongle'), so pins 4,5 (blue, blue/white) and pins 7,8 (brown, brown/white) will always be 'spare' - however using them is not as easy as you might think (see Pi model B Ethernet socket issue, below).

'Professional' PoE solutions cost the usual 'arm and a leg', with 37-57v sent down up to 100m of cable (see here for 'proper' PoE specs). Plainly 'aimed' at the Corporate user base, the DC-DC converters required are ludicrously expensive (the 'cunning use' of a 37v 'minimum' is plainly designed to prevent the use of cheaper regulators, which are limited to 36v max). If your 'DIY PoE' limits itself to less than 36v, you can use 'off the shelf' converters instead. Even the Farnell ones (6.5-36v to 5v, 1A for about £4) are a fraction of the 'professional' PoE cost. If you are prepared to wait (and are OK with an open PCB product), it's even cheaper from China via eBay ("1/5PCS LM2596S DC-DC Power Supply Buck Converter Adjustable Step Down", 40v in, 2A out, 5 pcs for £3.69, so 74p each).

If your cable length can be kept under 50m, a 24v 'feed in' supply voltage can be used, and for cables of 25m or less, at the typical power consumption level of a Raspberry Pi (even with camera, it's less than 500mA at 5v = 2.5w), a feed-in voltage of 12v (2.5w at 12v is about 210mA) should work just fine (a 12v to 5v (1.8A continuous current rated) DC-DC converter can be had for 68p (Qty 5 off) from China via eBay).

When choosing a DC-DC converter for the Pi end, look for a 'buck' converter rather than a 'linear' type (sold by some expensive UK High Street chains, such as Maplins), especially if you are driving more than about 18v down the PoE wires, since (for a 'linear' converter) the higher the 'input' voltage, the higher the heat dissipation.
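To see why 12v is fine over 25m while feeding 5v straight down the cable is not, here is a rough voltage-drop sketch in bash. The cable resistance (about 0.084 ohm/m per 24 AWG copper conductor) is an assumption for solid copper Cat5e, not a figure from this page; cheap copper-clad aluminium cable will be worse. Converter efficiency, and the extra current caused by the drop itself, are ignored.

```bash
# Rough DC voltage drop along a DIY PoE run (Mode B: pins 4,5 = V+, pins 7,8 = 0V).
# Assumptions (not measurements):
#   - solid 24 AWG copper, about 0.084 ohm per metre per conductor
#   - each leg uses 2 conductors in parallel (0.042 ohm/m), out and back
#   - load current = load power / feed voltage (converter losses ignored)

OHMS_PER_M_CONDUCTOR=0.084

poe_drop() {   # usage: poe_drop FEED_VOLTS CABLE_METRES LOAD_WATTS
    awk -v v="$1" -v m="$2" -v w="$3" -v r="$OHMS_PER_M_CONDUCTOR" 'BEGIN {
        loop_ohms = 2 * m * (r / 2)   # out and back, two conductors in parallel per leg
        amps = w / v
        drop = amps * loop_ohms
        printf "%.2f A, %.2f V drop, %.2f V at the converter\n", amps, drop, v - drop
    }'
}
```

For example, `poe_drop 12 25 2.5` reports about 0.44v lost (11.56v left for the buck converter), whereas `poe_drop 5 25 2.5` leaves only about 3.95v at the far end, far too little for a Pi.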

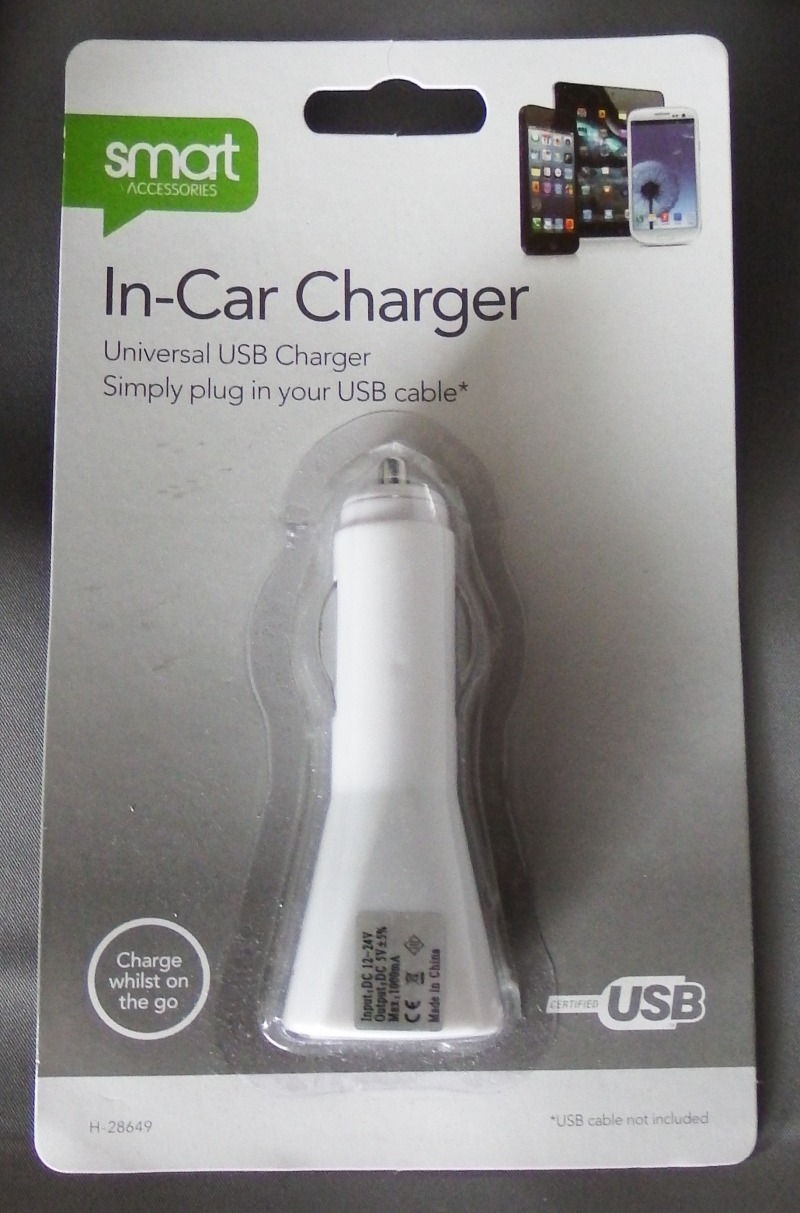

You can find 'buck' style converters in almost every 'USB car adapter'. Those (such as the 'Smart' adapter) rated for 'car/lorry' use (12v/24v) can be found for a couple of quid (including micro-USB plug cable) from eBay.

Be warned that most of those found in the £1 stores only output 500mA, although this should be (just) enough for the Pi Zero/A+. More to the point, many cheap ones are only 12v input limited - so check the specification 'sticker' carefully before using them for 24v PoE :-)

Whilst the 'car USB charger' will give you the required 5v 'steady state' voltage, many fail to cope with the Pi's wildly variable current demands. This can be addressed with a decent 'reservoir' capacitor (100uF, 6v) at the output of the converter, although you may have to use shielded Cat6 Ethernet cable (to stop it acting as an aerial)

Of course you can buy a 'real' PoE 'HAT' - see, for example, one from RS components (for the rip-off price of £25, for which you get the Pi HAT only, no cable, no 'injector' no 12/24v PSU). Since it's specified to support an input voltage of 36-56v, plainly RS expect you to pay another £25 for their PoE power 'injector' power block (remember, the 36v PoE minimum requirement has been carefully chosen to prevent the use of cheap 24v sources, and for sure to prevent the use of the 'dedicated' 12v PoE injectors that can be found on eBay for less than £5)

Using a PoE hub

Don't bother = all PoE hubs are (of course) limited to 100mbs (a Gigabit hub uses the PoE wires for 1000mbs transmission), and (even on eBay), a PoE hub is at least 3x the cost of a Gigabit hub with the same number of ports. Since it costs only £1 for a PoE 'power injector' (or PoE 'split off') adapter and less than £2.50 for a 12v power-block (eBay), it makes no sense to pay £10 a port extra for a PoE hub. Note that the 4+1 port PoE hub rated at 10/100 can only support an 'up-link' data rate of 100mbs. When spread over 4 'down-link' ports that's 25mbs each. If one 'down' port uses the full 100mbs, the other ports must get 0 ! However a 4+1 port Gigabit hub will support an 'up-link' data rate of 1000mbs - spread over 4 ports that's 250mbs each. That means all 4 'down-link' ports can be run at a full 100mbs each (or one at 100 and the other 3 at 300mbs each :-) )

The Pi model B (B/B+/B2,B3) Ethernet socket issue

The problem with the Pi B series is that the designers have chosen a 'clever' Ethernet socket that has internal circuitry which shorts pin 4 to 5 and pin 7 to 8 (so far so good) but then feeds them via some sort of internal resistor / capacitor network into the 10/100 receiver transformer circuits !! (see page 3 of the Pi circuit diagram). This, of course, means any 'noise' on these wires (as would be caused by using them to power the Pi) will be 'injected' straight into the Ethernet data wires, leading to data corruption and a massive deterioration in throughput.

So, in order to use a B series Pi with PoE, you have to 'split off' the pins used for PoE (4,5 and 7,8) from the incoming Ethernet cable before it reaches the Pi (PoE adapters exist, or you can physically cut open the cable). The final choice - replacing the socket - risks damaging the Pi motherboard.

Of course you also need to 'feed in' the PoE DC voltage at the LAN end - and an Ethernet hub with 'real' (48v) PoE costs an 'arm and a leg'. You can find 12v or 24v PoE hubs on eBay for not much more than one without, however most have only 4 sockets, many are limited to 500mA per socket and (of course) all PoE hubs are limited to 100Mbps. By far the best solution (and the only solution for those using Gigabit hubs) is a pair of PoE 'split in/out' adapters. These can be had on eBay for £2 the pair (or more than double that from a 'Pi shop'), and an off-the-shelf 2A+ 24v 'power block' can be found for less than £5 (or 12v 2A for less than £2.50) if you search around on eBay.

Pi A/A+ PoE

The Ethernet socket is not fitted to the A, so there is plenty of space to 'stick' a DC-DC converter (the output of which can be wired to the USB power-pins, although you will have to bridge the USB 'reverse protection' diode). Assuming you want to 'talk' to the Model A Pi by Ethernet (why else did you run the wires?), you will then need a USB to Ethernet 'dongle', about £2.50 from eBay (the Ethernet comms chip (LAN9512) is simply not fitted to the Model A) = see below. The plastic case of most 'USB to Ethernet' dongles can be popped open (the rest can be cut open), allowing you to 'get at' the PoE pins inside - indeed in some cases it's even possible to add a DC-DC regulator inside the dongle! I've not (as yet) found any 'dongle' using the same sort of 'clever' Ethernet socket (that feeds noise from the PoE wires into the 100Mbps pins) as used by the Pi Bs, however if you do run into one then you will need a PoE 'break out' adapter between the incoming Ethernet cable and the USB dongle. The output of the converter then has to be fed to the Pi micro-USB power socket - or better, you can 'back drive' the Pi from the dongle.

Pi Zero PoE

As per the A/A+; however, the Pi Zero (which effectively replaces all but the Pi B3) has the advantage that you can power it straight from its USB data socket without even needing to add wires or bridge diodes!

So PoE is actually a lot easier to use with the Pi Zero (even compared to the A/A+). Just open up the USB to Ethernet 'dongle', wire the incoming PoE (pins on the Ethernet socket) to the DC-DC converter, then wire the 5V (output from the converter) direct to the micro-USB 'feed in cable' pins = and case closed !

Note. For the Pi Zero almost any 'off the shelf' 12/24v car/lorry 'cigarette lighter socket' USB power adapter can be dismantled and the insides will often be small enough to fit inside an 'Ethernet-to-USB' dongle (especially after de-soldering the full sized USB socket).

However, if you are using an 'Ethernet+3port hub' dongle, then the typical car adapter (rated at 500mA) is not going to deliver enough power (3 ports at 500mA each = 1.5A !).

So you will need a 12/24v DC to 5v DC converter rated at 2A, and these are actually cheaper than the Poundshop 'car adapter' ('5PCS DC-DC buck adjustable step down Regulator' can be had for £3.40 on eBay, so 68p each). The PCB is only 11mm wide (17mm long), so these will fit within most USB Ethernet 'dongles' (they are rated for a continuous current of 1.8A, same as the 'headline 3A' product).

In short, the ease of using it with PoE is just one more reason to stick with the Pi Zero.

This note last modified: 29th May 2018 14:36.

Any mains-powered PoE system has to anticipate possible criminal action to disable the power. To cope, you need 'battery back-up' aka a 'UPS' - for full details, see my Pi UPS page, for an overview, see below :-

CCTV UPS requirements

An alternative to PoE is Solar + WiFi :-

Solar Power (with WiFi)

A Solar Power solution comes in at over £100 for a unit positioned within WiFi distance. A 'comparable' PoE solution is around £30 (£10 for a basic PoE, £10 for 30m of Ethernet cable, £10 for a basic UPS).

Unless cost is irrelevant (or running cables really is impossible), you should stick to PoE.

Providing night-time illumination

iR illumination

2) Enable the Camera

After plugging in the camera, launch the "raspi-config" tool from the Terminal window :-

sudo raspi-config

Select 'Enable camera' and hit 'Enter', then go to 'Finish' and you'll be prompted to reboot.

The Pi camera has a bright red LED that glows when the camera is actually taking photos. If your camera is intended only as a 'deterrent' this is an advantage - however if it's for 'evidence gathering' you don't want to give the criminal visual clues that might help him avoid being 'seen' (or worse, highlight the camera position so it can be covered, repositioned** or destroyed).

** Serious criminals will always find some excuse to visit you during the day, so an 'evidence gathering' camera must be hard to 'spot', hard to avoid and hard to access (even the dumbest criminal will try to move or cover up a camera that's within easy reach). So don't assume that you only need the camera to run at night.

Of course, should the 'no motion' (static) image suddenly change - due to the camera being covered, repositioned or ripped off the wall - it would really help if your software sounds some sort of 'alarm' (if the criminal returns the following day to find their tampering has been ignored, you can be sure they will be back again after dark ...)

So, to stop the LED coming on every time the camera is 'active', modify the /boot/config.txt file by adding the following :-

disable_camera_led=1

Enable TV out

By default, all current Pi distros use the HDMI output. To use TV out (RCA socket), from your PuTTY SSH terminal window (log in 'pi', password 'raspberry'), edit (sudo nano) the "/boot/config.txt" file and reboot.

Make sure to select a 'SDTV mode' and 'SDTV aspect' :-

# Normal PAL=2 (NTSC=0)
sdtv_mode=2
# set 4:3 (others are sdtv_aspect=2 (14:9), and sdtv_aspect=3 (16:9))
sdtv_aspect=1

Now disable HDMI (i.e. comment out the enable) :-

#hdmi_force_hotplug=1
#hdmi_drive=2

Note that changes to /boot/config.txt are only applied after a reboot ...
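Editing config.txt by hand is easy to get wrong (duplicate lines, or a setting left commented out), so here is a small bash sketch of two hypothetical helpers that set or comment out KEY=VALUE lines. It assumes GNU sed (as on Raspbian) and simple alphanumeric keys, and it does not understand config.txt [section] filters.

```bash
# Edit KEY=VALUE settings in /boot/config.txt (or any similar file) without
# creating duplicates. Run as root; config.txt changes need a reboot.

set_config() {       # usage: set_config FILE KEY VALUE
    local file=$1 key=$2 value=$3
    cp -- "$file" "$file.bak"                        # backup of the previous state
    if grep -qE "^[#[:space:]]*${key}=" "$file"; then
        # replace an existing (possibly commented-out) KEY= line
        sed -i -E "s|^[#[:space:]]*${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"  # not present: append it
    fi
}

comment_config() {   # usage: comment_config FILE KEY  (disable an active KEY= line)
    local file=$1 key=$2
    sed -i -E "s|^(${key}=)|#\1|" "$file"
}
```

From a root shell (e.g. `sudo -i`), after sourcing the functions, `set_config /boot/config.txt disable_camera_led 1`, `set_config /boot/config.txt sdtv_mode 2`, `set_config /boot/config.txt sdtv_aspect 1`, `comment_config /boot/config.txt hdmi_force_hotplug` and `comment_config /boot/config.txt hdmi_drive` cover the settings above. The same helpers work on other KEY=VALUE files such as /etc/kbd/config.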

Disable the 30min screen blanking 'screen saver'

From PuTTY you can use the 'sudo setterm -blank 0 -powerdown 0' command, however that only applies to the 'current session'. A more permanent solution is to edit (sudo nano) the file "/etc/kbd/config" and change the following entries to the values shown :-

# blank 0 means never
BLANK_TIME=0
# DPMS is the energy star garbage, no effect on TV out, but turn it off anyway in case you test with HDMI display
BLANK_DPMS=off
# powerdown 0 means never
POWERDOWN_TIME=0

Save (Write Out using Ctrl+O), exit (Ctrl+X) and reboot (sudo reboot).

3) Adding the time and date

It's all very well to use the file 'creation' date, however that can be lost if the photo is copied around from Pi to PC to backup drive etc. So instead we write the 'take a photo' script to set the photo file name to the current date and time, for example :-

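#!/bin/bash

mkdir -p /home/pi/photos # make sure the output folder exists

while true # for as long as true evaluates to true

do # do the following:

pixDATE=$(date +"%Y-%m-%d_%H%M%S.%3N") # e.g. 2018-05-29_143601.123

raspistill -t 200 -n -o "/home/pi/photos/$pixDATE.jpg" # one photo, named after the date/time

done # loop back to do again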

%N in the 'date' command gives the nanoseconds part of the current time, and %3N reduces this to 3 digits, i.e. ms

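-t is the delay in ms before the photo is taken (so 200 ms per photo, i.e. at most 5 fps; in practice fewer, since raspistill also has to start up and initialise the camera on every pass of the loop)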

(Yes, that means the time stamp, calculated before taking the photo, will be 200 ms 'too early', however the photo sequence names will be in exactly the correct order.)

-n means don't waste time generating a 'preview' for the display

The above script will keep running until it has generated enough files in the /home/pi/photos/ folder to fill up the entire SD card (at which point it crashes and your system becomes unusable). In the 'real' system, the /home/pi/photos/ folder would be a RAM disk (tmpfs), and a second script would be removing photos at least as fast as they are taken (discarding 'no motion' photos and moving 'motion' photos to the SD card), with a 3rd script removing them from the SD card and sending them off to a remote destination across the Ethernet, so the RAM disk / SD card space 'never' runs out.
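A minimal sketch of the 'discard' half of that housekeeping, as a hypothetical bash function: it keeps only the newest N frames in the folder (a crude ring buffer). It relies on the date-stamped names from the script above, so sorting by name is the same as sorting by capture time. In the full scheme, 'motion' frames would be moved to the SD card before this runs.

```bash
# Crude ring buffer: keep only the newest MAX_FILES photos in a folder by
# deleting the oldest. Relies on the date-stamped names from the capture
# script, so sorting by name is the same as sorting by capture time.

prune_oldest() {   # usage: prune_oldest DIR MAX_FILES
    local dir=$1 max=$2 excess
    local -a files
    shopt -s nullglob
    files=("$dir"/*.jpg)               # bash sorts glob results by name
    shopt -u nullglob
    excess=$(( ${#files[@]} - max ))
    if (( excess > 0 )); then
        rm -f -- "${files[@]:0:excess}"   # the first names are the oldest
    fi
}
```

For example, calling `prune_oldest /home/pi/photos 50` after each raspistill call in the loop caps the folder at the 50 most recent frames (about 10s at 5 fps).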

Whilst the above gives photos ascending date-derived file names, one problem with the Pi is that it has no 'real time clock'. This means every time you turn it on it has to discover 'today's date'. If you have a Pi with an Internet connection (Model B with Ethernet, Model A with WiFi (or Ethernet dongle)) this is 'no problem' so long as it can reach an NTP Server (just like a Windows PC).

Note that the date calculations will ONLY be correct so long as the Pi can reach the Internet at least once (after power-on), and whilst its system clock (restored at boot from the "fake-hwclock" data) doesn't 'slip' by more than 199 ms between Internet time updates.

Does the right time matter? Well, it does if you intend to use your CCTV footage as any sort of 'evidence' in a Court of Law.

If the Pi has a connection to a PC (but not to the Internet), it is possible to have the PC 'send' its own RTC time to the Pi.

An alternative is to install your own NTP Time Server service on your own LAN Server

If the Pi has no way to obtain the current time, you can set the time manually on the Pi by finding the 'fake-hwclock' data file (/etc/fake-hwclock.data) and changing its contents. NOTE that UNIX system clocks run in UTC (and adjust the value to the local time-zone prior to actual use or display, as necessary). NOTE also that you will have to 'kill' the 'hwclock' process to get access to its data file (and then reboot for your manually entered value to take effect) = so the 'best' you can do manually is to get within a few seconds of the 'real' time - after which the clock may also 'drift' somewhat.
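Since the stored value has to be in UTC, it is easy to be an hour out during summer time. A small bash helper that turns a local time into a UTC line; it assumes the data file holds a single "YYYY-MM-DD HH:MM:SS" UTC line (check the existing /etc/fake-hwclock.data on your own Pi before overwriting it), and it needs GNU date for the -d option. The function name is illustrative.

```bash
# Print the current (or a given) time as a single UTC line, the format
# assumed here for /etc/fake-hwclock.data ("YYYY-MM-DD HH:MM:SS").
# Uses GNU date: -u prints UTC, -d parses the optional input time.

hwclock_line() {   # usage: hwclock_line [TIME]  (TIME is local unless it names a zone)
    date -u -d "${1:-now}" '+%Y-%m-%d %H:%M:%S'
}
```

For example, on a machine set to UK time during summer time (BST), `hwclock_line '2018-05-29 15:36:00'` gives `2018-05-29 14:36:00`; piping the result through `sudo tee /etc/fake-hwclock.data` replaces the stored value.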

The 'problem' is, of course, that it's all too easy for you (or the Pi) to 'get it wrong' - as some clever 'defense counsel' will be the first to point out - either by you forgetting to manually enter the value, entering the wrong value or having the Internet link go down during boot-up ..

To ensure the Pi keeps 'correct' time, a 'Raspberry Pi RTC' (Real Time Clock) module can be used (this communicates using 'i2c' and typically costs less than $2, eBay prices, China, post free). However, the only way to 'guarantee' the Pi has the right time (for use in evidence) is to use the 'Raspberry Pi GPS' module - these cost $15 or less (again, eBay prices, China, post free) == and then 'overlay' the time onto each camera photo frame image

Overlay the time onto camera images

1) The date/time can be added using the 'Text Overlay' function in the GPU. This is a relatively 'newly discovered' function, so ignore any 'how to' that's over 1 yr. old

If your CCTV footage is to be of any evidential use, you must have an accurate time and date 'stamped' into the video frame by frame. To do this, a good place to start is the V4L2 driver examples, especially example 9. Using MMAL, the 'path' used by the demo example 9 is "MMAL Camera - Buffer(I420) - Mix Image Overlay (600x100px) - Buffer (I420) - Encoder - File"
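A simpler (but coarser) alternative to building an MMAL overlay pipeline, assuming a raspistill build that supports the -a/--annotate option (check `raspistill --help` on your own Pi): the camera firmware stamps the date and time onto each photo as it is taken. The annotation only shows whole seconds, so the millisecond file names from the capture script are still worth keeping. The function and variable names are illustrative.

```bash
# Take one still with the date and time drawn into the image by the camera
# firmware, via raspistill's annotate option (-a 12 = time (4) + date (8)).
# RASPISTILL can name a different command, e.g. a stand-in for dry runs.

RASPISTILL=${RASPISTILL:-raspistill}

capture_stamped() {   # usage: capture_stamped OUTPUT.jpg
    # -t 200: wait 200 ms before capture; -n: no preview; -a 12: date + time
    "$RASPISTILL" -t 200 -n -a 12 -o "$1"
}
```

In the capture loop, `capture_stamped "/home/pi/photos/$pixDATE.jpg"` would replace the plain raspistill line.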

2) Standard CCTV systems add the time/date to an analogue video stream when recording it

If the Pi is being used as a 'classical' CCTV camera, then it will be transmitting analogue TV via its RCA socket. If you don't have a dedicated CCTV recorder, and can't get the Pi to add the date/time using the GPU, you can 'intercept' the analogue video stream and use a VTI (Video Time Inserter) / OSD (On Screen Display), for example, using a PIC as per my DIY OSD/VTI Project.

4) Motion detection

The 'goal' is to obtain a recording of any 'intruder' from a few seconds prior to 'detect' (since we assume the intruder will be well within the boundary before tripping the 'detect' threshold) until no further motion is found at all.

Good image quality increases the possibility of identification, however if this means we have to drop the frame rate too low, the camera will likely 'miss' the chance of a 'best shot' (for example as the intruder glances toward the camera lens when he notices the LED blinking). This means we want frame rates above 3 per second (I use 5 fps), even if that means the quality has to be reduced to cope.

Of course, the higher the data rates, the harder it is to 'keep up' with the 'motion detection'. Eventually you end up trading off 'detect accuracy' against 'detect speed' - the higher the accuracy, the longer it takes, whilst the faster the detect, the lower the accuracy.

One high speed 'motion detect' approach is to simply compare JPEG photo file sizes. If two (successive) photo files are 'the same', then nothing has changed. Of course it's unlikely the jpegs will be EXACTLY the same size - so there will need to be a 'threshold' - and that means we have to compare photos against some 'background template' (otherwise comparing successive photos will 'miss' slow changes, i.e. changes below the threshold)
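A minimal sketch of the file-size comparison just described, assuming a 'background template' JPEG is kept alongside the new frames; the 5% default threshold is a guess that would need tuning per scene, and the function names are hypothetical.

```bash
# File-size "motion detect": JPEG size tracks how much detail is in the scene,
# so a large change in size against a background template suggests the scene
# has changed. The default 5% threshold is a guess and needs tuning per camera.

file_size() {      # usage: file_size FILE  -> size in bytes
    wc -c < "$1" | tr -d ' '
}

size_changed() {   # usage: size_changed TEMPLATE.jpg NEW.jpg [THRESHOLD_PCT]
    local ref new diff pct=${3:-5}
    ref=$(file_size "$1")
    new=$(file_size "$2")
    diff=$(( new > ref ? new - ref : ref - new ))
    (( diff * 100 > ref * pct ))   # exit 0 ("changed") if over the threshold
}
```

For example, `size_changed template.jpg latest.jpg && echo motion`. The template itself would need refreshing from time to time with a 'no motion' frame, so that slow lighting changes are not reported as motion.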

If a separate PIR 'motion detector' is available (one is likely to be fitted to your flood lights), 'all' we need to do is use it to 'trigger' the Pi to start (saving) its recording - however all PIRs suffer from some 'lag', with the result that the recording will start some seconds AFTER the intruder enters the PIR field of view. To address this, we typically end up 'aiming' the PIR just outside the camera FOV (at night, this turns on the lights), often with an additional PIR covering the actual FOV (to keep the recording going).

A solution to the 'lag' problem (during daylight) is to use a 'ring buffer' of a few seconds of video/photos held in RAM. When the trigger is seen, the Pi starts writing the oldest data in the buffer to SD card. Of course this doesn't help at night (unless street lighting etc. is sufficient for long exposure imaging)
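The 'write out the buffer on trigger' step can be sketched in bash as below, assuming the RAM buffer is a tmpfs folder of date-named JPEGs (as produced by the capture script earlier) and the SD card copy goes to an ordinary folder; both paths and the function name are hypothetical.

```bash
# On a trigger, move everything buffered in RAM to the SD card, oldest first,
# so the frames from the seconds *before* the trigger are kept as well.

flush_buffer() {   # usage: flush_buffer RAM_DIR SD_DIR
    local src=$1 dst=$2 f
    mkdir -p -- "$dst"
    shopt -s nullglob
    for f in "$src"/*.jpg; do          # name order = capture order (date-stamped names)
        mv -- "$f" "$dst"/
    done
    shopt -u nullglob
}
```

For example, a PIR handler could run `flush_buffer /home/pi/photos /home/pi/saved` when it fires.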

Another drawback with PIRs is that they only trigger on (quite fast) movement, and typically even fail to 'spot' something that is suddenly removed from (or added to) the scene (so long as that item doesn't keep moving).

To 'spot' slow movement, we can use software to analyse the camera output for 'differences' between the current image and some 'reference' image. Even the slowest movement will eventually 'trigger' some 'difference' threshold. Examining individual frames is easy on an MJPEG stream, not too difficult on an uncompressed AVI video stream, but somewhat harder for an h.264 video stream. Fortunately, it is possible to extract still frames (even at lower resolution) at the same time as the Pi's GPU is generating the (full resolution) compressed video stream (see below). It is also possible to extract RAW data when generating a sequence of .bmp, .png or .jpg still frames from the Pi.

Another way to obtain high resolution images with fast motion detect is to use "YUV" mode. This delivers each frame as a full-size Luminance (Y) plane (two thirds of the data) followed by two quarter-size U and V chrominance planes (one sixth of the data each). You can then perform 'motion detect' directly on the 1/4 sized U or V sub-components.
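For reference, the sizes of the three planes in one I420 (YUV 4:2:0 planar) frame, which is where the ~7.5MB per full-frame YUV image quoted later comes from. A small bash sketch, assuming even width and height (as with the Pi camera modes); the function name is illustrative.

```bash
# Layout of one raw I420 (YUV 4:2:0 planar) frame: a full-size Y plane,
# then U and V planes at half width and half height (a quarter of the pixels each).
# Assumes even WIDTH and HEIGHT.

i420_layout() {   # usage: i420_layout WIDTH HEIGHT
    local w=$1 h=$2 y c
    y=$(( w * h ))
    c=$(( (w / 2) * (h / 2) ))
    echo "Y=$y U=$c V=$c total=$(( y + 2 * c ))"
}
```

`i420_layout 2592 1944` gives `Y=5038848 U=1259712 V=1259712 total=7558272`, the same 3*2592*1944/2 bytes per frame used with split further down. Because the Y plane comes first, it can be cut out of a frame with `head -c 5038848 frame.yuv > frame.y`.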

What photo frame rates can we get?

Instead of limiting the frame rate to the 'motion detection' speed, we generate sets of photos 'at max. frame rate'. The 'motion' detect software then takes a single 'sample' frame from each set to decide to save or discard that whole set. One possible method is outlined below

1) The Pi camera (& GPU) is set to take a continuous series of still images at maximum resolution = 2592×1944 (full sensor, full FOV), at full speed = 15fps LUV (i.e. YUV: Luminance plus U/V colour).

2) A second task compares the newest image 'now' with some 'no motion' standard (or with whatever was newest 'last time') and looks for 'differences'. If LUV was used, this is just a matter of subtracting the pixel Luminance values from one another (which works both in daylight/flood lights and at night/low light, when there is 'no' UV colour in the long-exposure shots). If the difference is 'zero' (actually, below the 'noise' threshold), we throw away everything prior to the latest image. If it is above the detect threshold, we start a 3rd task that moves the images to the SDHC card (this is faster than sending via LAN or USB).

This means the time taken to do the analysis no longer matters so much - if it takes one second, we will be throwing away 15 images at a time, if half a second then 7 or 8, and if 2s then 30. The 'subtract' approach also allows the definition of 'areas to pay special attention to' and 'areas to ignore'. Of course this supposes that we have sufficient RAM to hold all the images 'in a buffer' whilst deciding what to 'throw away' - and also assumes we can save (move) the images we decide to keep faster than new ones are being generated ...
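A minimal sketch of the luminance 'subtract' step, assuming raw I420 frames such as those written by the V4L2 capture further down. It compares only the Y plane and counts pixels whose brightness changed by more than a noise threshold. It uses GNU cmp and awk, so it is slow on 5 Mpixel frames on a Pi; treat it as an illustration of the technique rather than something fast enough for 15fps. The function name is hypothetical, and the 'areas to ignore' masking is not implemented.

```bash
# Luminance "subtract" check on two raw I420 frames. Only the Y (luminance)
# plane, the first WIDTH*HEIGHT bytes, is compared; the result is the number
# of pixels whose brightness changed by more than NOISE (0-255).
# GNU cmp -l prints "offset old new" for each differing byte, values in octal.

y_changed() {   # usage: y_changed WIDTH HEIGHT OLD.yuv NEW.yuv NOISE
    local ybytes=$(( $1 * $2 ))
    cmp -l -n "$ybytes" -- "$3" "$4" | awk -v noise="$5" '
        function oct(s,   v, i) { v = 0; for (i = 1; i <= length(s); i++) v = v * 8 + substr(s, i, 1); return v }
        { d = oct($2) - oct($3); if (d < 0) d = -d; if (d > noise) count++ }
        END { print count + 0 }'
}
```

For example, treating more than about 1% of a full frame (roughly 50000 pixels) changed by more than 20 levels as 'motion': `[ "$(y_changed 2592 1944 ref.yuv new.yuv 20)" -gt 50000 ]`. Both numbers are illustrative starting points. Masked areas could be skipped by ignoring byte offsets (the first cmp column) that fall inside them.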

An alternative approach is to run the camera in video mode and have the Pi camera extract jpg 'stills' at the same time. Again, for 'motion detect' we just compare successive still images.

Data rate limitations

There is no problem saving HD video (the data rate of h.264 video is only 'about' 16Mbps = 2MB/s). However, a full-frame RAW/LUV still is 7.5MB/frame, so 15fps = 112.5MB/s! Even if we dump the UV, that's still 5MB/frame of 'L', i.e. 75MB/s. The faster class 10 SD cards will support about 40MB/s, so (at best) we can empty the RAM buffer at about half the rate at which new photos are being generated. This means that, no matter how much RAM we have available, eventually new photos will start over-writing old photos that have not been saved yet (although the 1GB B2/B3 could keep going for 15s or more). One alternative is high quality JPEG at about 2.4MB/photo. At 15fps that's 'only' 36MB/s (and a 'top end' Class 10 SDHC should just about keep up). Whatever approach is taken, we need to prevent overwriting, so the 'take a new photo' software will have to be coded to wait for RAM to become available (this means the frame rate will drop to match the 'save' rate - or the 'no motion detected' deletion rate).
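One way to make the capture loop 'wait for RAM', sketched as a hypothetical bash function: before each photo, block until the buffer folder holds fewer than a given number of frames. Counting files rather than bytes is a simplifying assumption that works when frames are roughly the same size; the folder and limit are illustrative.

```bash
# Back-pressure for the capture loop: block until the RAM buffer holds fewer
# than MAX_FILES frames, so frames not yet saved are never overwritten.
# The effective frame rate then falls to the save (or deletion) rate.

wait_for_room() {   # usage: wait_for_room DIR MAX_FILES [POLL_SECONDS]
    local dir=$1 max=$2 poll=${3:-0.1}
    local -a files
    while :; do
        shopt -s nullglob
        files=("$dir"/*.jpg)
        shopt -u nullglob
        (( ${#files[@]} < max )) && return 0
        sleep "$poll"                  # GNU sleep accepts fractional seconds
    done
}
```

For example, calling `wait_for_room /home/pi/photos 40` just before raspistill in the capture loop stops new frames being taken while 40 unsaved frames are waiting.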

Note that, whatever approach is used to 'detect' motion, once 'triggered' we are likely to need every CPU cycle to 'save' the photos. This means the Pi CPU will have no time for any further 'motion' checking, i.e. the decision to 'stop recording' will have to be based on elapsed time (rather than on lack of motion).

For a guide to using a 'RAM ring buffer', go visit here, although (in practice) using the *nix tmpfs RAM disk is likely to be simpler.
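The 'wait for RAM to become available' rule can be sketched with Python's standard `queue.Queue`, whose `put()` blocks while the queue is full. This illustrates the idea only; it is not the ring buffer from the linked guide. `run_pipeline` is a hypothetical name, and the short byte strings stand in for real frames.

```python
import queue
import threading
import time
from typing import List


def run_pipeline(n_frames: int, buffer_frames: int, save_delay: float = 0.0) -> List[bytes]:
    """Capture n_frames into a bounded buffer while a second thread 'saves' them.

    Queue.put() blocks while the buffer already holds buffer_frames items, so the
    capture loop slows to the save rate instead of overwriting unsaved frames.
    """
    buf = queue.Queue(maxsize=buffer_frames)
    saved: List[bytes] = []

    def saver() -> None:
        while True:
            frame = buf.get()
            if frame is None:       # sentinel: capture has finished
                break
            time.sleep(save_delay)  # stand-in for writing to the SD card
            saved.append(frame)

    worker = threading.Thread(target=saver)
    worker.start()
    for i in range(n_frames):
        frame = f"frame-{i:04d}".encode()  # stand-in for real image data
        buf.put(frame)                     # blocks here whenever the buffer is full
    buf.put(None)
    worker.join()
    return saved
```

With a tmpfs RAM disk instead, the same back-pressure could come from checking free space (e.g. with `shutil.disk_usage()`) before writing each new frame.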

Getting images out of the Pi camera

The MMAL Project

The MMAL project allows access to the VideoCore (so you can perform some functions on the GPU).

Note: all output resolutions below 1296x976 use the sensor's 2x2 binned mode (so 4x the 'sensitivity' plus a 50% reduction in noise, at no extra cost).
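The binning arithmetic can be illustrated in a few lines. Each output pixel is the sum of a 2x2 block of sensor pixels, so the signal is 4x larger, while random (uncorrelated) noise grows only by √4 = 2x; the signal-to-noise ratio therefore doubles, i.e. noise relative to signal is halved. This sketch only shows the arithmetic on a list of pixel values (`bin2x2` is a hypothetical name); on the Pi the binning is done by the sensor itself.

```python
from typing import List


def bin2x2(pixels: List[List[int]]) -> List[List[int]]:
    """Sum each 2x2 block of a (height x width) image; both dimensions must be even."""
    height, width = len(pixels), len(pixels[0])
    if height % 2 or width % 2:
        raise ValueError("width and height must be even")
    return [
        [
            pixels[y][x] + pixels[y][x + 1] + pixels[y + 1][x] + pixels[y + 1][x + 1]
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]
```

The output has half the width and half the height, which is why a 2592x1944 sensor yields a 1296x972-class image in this mode.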

Using V4L2, the commands:-

sudo modprobe bcm2835-v4l2 max_video_width=2592 max_video_height=1944

v4l2-ctl -p 15

v4l2-ctl -v width=2592,height=1944,pixelformat=I420

v4l2-ctl --stream-mmap=3 --stream-to=/dev/null --stream-count=1000

show that the sensor is reading out at 15 or 16fps (and is genuinely reading the full 5MPix off the sensor and processing it to produce the output frames).

The file from V4L2 (when streaming to a file, e.g. --stream-to=video.yuv, rather than to /dev/null) is raw YUV420. It contains no information on resolution, so this needs to be specified when reading it.

To display it, try ffplay:-

ffplay -f rawvideo -pix_fmt yuv420p -video_size 2592x1944 -i video.yuv

Or you can split the file into frames and use 'convert' (from ImageMagick) to turn each one into a JPEG, i.e.:

split -d -b $((3*2592*1944/2)) video.yuv frame-
for i in frame-??; do
    mv $i $i.yuv;
    convert -size 2592x1944 -depth 8 -sampling-factor 2x2 $i.yuv $i.jpg;
done

The above (top) generates 7.5MB/frame (so 10 frames = 75MB).
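If a greyscale image is enough (e.g. for checking motion frames), the split/convert loop can also be done in Python without ImageMagick. This sketch assumes the same headerless YUV420 layout as above and writes just the Y (luminance) plane of each frame as a binary PGM file, which most image viewers (and ImageMagick) can open. The function names are hypothetical.

```python
import io
from typing import Iterator


def iter_yuv420_frames(f: io.BufferedIOBase, width: int, height: int) -> Iterator[bytes]:
    """Yield one raw YUV420 frame at a time from a headerless .yuv stream."""
    frame_size = width * height * 3 // 2
    while True:
        chunk = f.read(frame_size)
        if len(chunk) < frame_size:  # end of file, or a truncated last frame
            return
        yield chunk


def y_plane_as_pgm(frame: bytes, width: int, height: int) -> bytes:
    """Wrap the luminance (Y) plane of a frame as a binary greyscale PGM image."""
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    return header + frame[: width * height]


def yuv_to_pgm_files(path: str, width: int, height: int, prefix: str = "frame-") -> int:
    """Write prefixNNNN.pgm for every complete frame in path; return the frame count."""
    count = 0
    with open(path, "rb") as f:
        for frame in iter_yuv420_frames(f, width, height):
            with open(f"{prefix}{count:04d}.pgm", "wb") as out:
                out.write(y_plane_as_pgm(frame, width, height))
            count += 1
    return count
```

For example, `yuv_to_pgm_files("video.yuv", 2592, 1944)` would write frame-0000.pgm, frame-0001.pgm, and so on into the current directory.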

To generate MJPEG instead:-

v4l2-ctl -v width=2592,height=1944,pixelformat=MJPG

v4l2-ctl --stream-mmap=3 --stream-to=./video.mjpg --stream-count=50

MJPEG is a 'video' codec and has a target bit-rate, rather than the normal 'compression' Q-factor. The encoder tweaks the Q-factor per frame based on the bit-rate achieved so far. As long as you set the bit-rate high enough(*), the image quality should be maintained reasonably well.

NB. MJPEG is not an efficient codec, but it's the only 'full frame' option as H264 on the Pi is limited to 1920x1080 max.
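The video.mjpg file produced above should be a series of complete JPEG images written back to back, so individual stills can be recovered by cutting at the JPEG start-of-image (FF D8) and end-of-image (FF D9) markers. A minimal sketch, assuming the frames carry no embedded EXIF thumbnail (a thumbnail has its own markers and would confuse this simple scan); `split_mjpeg` is a hypothetical name.

```python
from typing import List

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_mjpeg(data: bytes) -> List[bytes]:
    """Cut a raw MJPEG stream (back-to-back JPEG images) into individual JPEGs.

    A truncated final frame without an end marker is dropped.
    """
    frames = []
    pos = 0
    while True:
        start = data.find(SOI, pos)
        if start < 0:
            break
        end = data.find(EOI, start + 2)
        if end < 0:
            break
        frames.append(data[start : end + 2])
        pos = end + 2
    return frames
```

For example, reading video.mjpg in binary mode and writing each element of `split_mjpeg(data)` to its own numbered .jpg file gives one still per frame, with no re-encoding.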

From http://picamera.readthedocs.org/en/release-1.6/recipes2.html#rapid-capture-and-streaming

1) "capture_sequence()" is by far the fastest method (because it does not re-initialize an encoder prior to each capture). Using this method, the author has managed 30fps JPEG captures at a resolution of 1024x768 !!
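A sketch of timing the `capture_sequence()` recipe, following the picamera 1.x documentation. `timed_capture_sequence` is a hypothetical wrapper, and the 60-frame count and 2-second settle time are illustrative choices. The camera is passed in as a parameter so the timing logic can be exercised without a Pi; the picamera part only runs where picamera is installed.

```python
import time
from typing import List, Tuple


def timed_capture_sequence(camera, n_frames: int, pattern: str = "image%02d.jpg") -> Tuple[List[str], float]:
    """Capture n_frames JPEGs via the video port; return (filenames, frames per second).

    camera is expected to be a picamera.PiCamera (anything with a compatible
    capture_sequence() method will do).
    """
    filenames = [pattern % i for i in range(n_frames)]
    start = time.monotonic()
    # use_video_port=True avoids the stills-port mode change, which is what makes this fast
    camera.capture_sequence(filenames, use_video_port=True)
    elapsed = time.monotonic() - start
    fps = n_frames / elapsed if elapsed > 0 else float("inf")
    return filenames, fps


if __name__ == "__main__":
    try:
        import picamera
    except ImportError:
        print("picamera not installed (this part only runs on a Pi)")
    else:
        with picamera.PiCamera() as camera:
            camera.resolution = (1024, 768)
            camera.framerate = 30
            time.sleep(2)  # let exposure and white balance settle
            names, fps = timed_capture_sequence(camera, 60)
            print("Captured %d frames at %.2f fps" % (len(names), fps))
```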

2) Capturing images whilst recording

The camera is capable of capturing still images while it is recording video. However, if one attempts this using the 'stills capture' mode, the resulting video will have dropped frames during the still image capture. This is because regular stills require a 'mode change' (on the VideoCore), causing the dropped frames (this is the flicker to a higher resolution that one sees when capturing while a preview is running).

However, if the use_video_port parameter is used to force a video-port based image capture (see Rapid capture and processing) then the mode change does not occur, and the resulting video should not have dropped frames, assuming the image can be produced before the next video frame is due:-

import picamera

with picamera.PiCamera() as camera:
    camera.resolution = (800, 600)
    camera.start_preview()
    camera.start_recording('foo.h264')
    camera.wait_recording(10)
    camera.capture('foo.jpg', use_video_port=True)
    camera.wait_recording(10)
    camera.stop_recording()

The above code should produce a 20 second video with no dropped frames, and a still frame from 10 seconds into the video. Higher resolutions or non-JPEG image formats may still cause dropped frames (only JPEG encoding is hardware accelerated).

3) Recording at multiple resolutions

The camera is capable of recording multiple streams at different resolutions simultaneously by use of the video splitter. This is probably most useful for performing analysis on a low-resolution stream, while simultaneously recording a high resolution stream for storage or viewing.

The following simple recipe demonstrates using the splitter_port parameter of the start_recording() method to begin two simultaneous recordings, each with a different resolution:-

import picamera

with picamera.PiCamera() as camera:
    camera.resolution = (1024, 768)
    camera.framerate = 30
    camera.start_recording('highres.h264')
    camera.start_recording('lowres.h264', splitter_port=2, resize=(320, 240))
    camera.wait_recording(30)
    camera.stop_recording(splitter_port=2)
    camera.stop_recording()

There are 4 splitter ports in total that can be used (numbered 0, 1, 2, and 3). By default, the recording methods (like start_recording()) use splitter port 1, and the capture methods (like capture()) use splitter port 0 (when the use_video_port parameter is also True). A port cannot be simultaneously used for video recording and image capture so you are advised to avoid splitter port 0 for video recordings unless you never intend to capture images whilst recording.

Image sizes

-rw-r--r-- 1 pi pi 15116598 Jun 9 09:01 image.bmp (5 Mpixels x 3 bytes (RGB) = 15MB, all images same size)
-rw-r--r-- 1 pi pi 3735046 Jun 9 08:56 image.jpg (100% quality; a less 'busy' image at 100% is 2.5MB)
-rw-r--r-- 1 pi pi 10204383 Jun 9 08:55 image.png (loss-less compression, so size differs between images)
-rw-r--r-- 1 pi pi 21520695 Jun 9 09:02 imageRAW.bmp
-rw-r--r-- 1 pi pi 10115070 Jun 9 08:56 imageRAW.jpg
-rw-r--r-- 1 pi pi 16636117 Jun 9 08:55 imageRAW.png

It is to be noted that the 'RAW' data is actually 5 Mpixels x 10 bits, so it 'only' adds about 6.25MB to the image size. Note also that .jpg is up to 5x FASTER than the other formats (this is because the GPU can encode to JPEG 'on the fly', so the 'total photo time' == the data transfer time).
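The BMP figure in the listing can be checked with a little arithmetic: an uncompressed 24-bit BMP is a 54-byte header followed by 3 bytes per pixel, with each row padded to a multiple of 4 bytes (no padding is needed at 2592 pixels wide). The sketch below reproduces the 15116598 bytes of image.bmp exactly. The 10-bit raw figure is only the tightly packed minimum; the raw block actually appended may include headers and row padding, which may explain why the RAW files grow by somewhat more. Function names are hypothetical.

```python
def bmp24_file_size(width: int, height: int) -> int:
    """Size of an uncompressed 24-bit BMP: 54-byte header + rows padded to 4 bytes."""
    row_bytes = (width * 3 + 3) // 4 * 4
    return 14 + 40 + row_bytes * height  # file header + info header + pixel rows


def packed_raw_bytes(width: int, height: int, bits_per_pixel: int = 10) -> int:
    """Bytes needed for tightly packed raw sensor data (no padding or headers)."""
    return width * height * bits_per_pixel // 8


if __name__ == "__main__":
    print(bmp24_file_size(2592, 1944))   # compare with image.bmp in the listing above
    print(packed_raw_bytes(2592, 1944))  # minimum size of the extra 10-bit raw data
```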

Installing the 'motion' (detection software) on the Pi

The 'standard' for Open Source motion detection/surveillance software is 'motion'. It works fine on a PC and can be made to work on the Pi (but expect seconds per frame, rather than frames per second).

The following is a 'composite' of various on-line 'How-To' articles :-

Start by logging into your Raspberry Pi as user 'pi', p/w 'raspberry' and type:-

sudo apt-get install motion

A number of packages will be installed during the installation process; just type "y" to proceed with the installation.

As of Q2 2014, the standard version of motion did not yet support the Raspberry Pi camera module.

So you need to download and install the Pi camera support modules from Dropbox as a separate step:-

cd /tmp

sudo apt-get install -y libjpeg62 libjpeg62-dev libavformat53 libavformat-dev libavcodec53 libavcodec-dev libavutil51 libavutil-dev libc6-dev zlib1g-dev libmysqlclient18 libmysqlclient-dev libpq5 libpq-dev

wget https://www.dropbox.com/s/xdfcxm5hu71s97d/motion-mmal.tar.gz

Unpack the downloaded file in the /tmp directory:

tar zxvf motion-mmal.tar.gz

After unpacking, replace the previously installed motion with the downloaded build:

sudo mv motion /usr/bin/motion

sudo mv motion-mmalcam.conf /etc/motion.conf

Next enable the motion daemon so that motion will always run by editing the config file:

sudo nano /etc/default/motion

and change the line to:

start_motion_daemon=yes

We're pretty sure that the official build of motion will shortly support the Raspberry Pi camera module as well.

To edit the motion configuration file use: "sudo nano /etc/motion.conf"

Note: in the standard motion installation, the motion.conf is in /etc/motion/, but in the special motion-mmal build from dropbox-url (see above) it's in /etc/. If you follow this tutorial with all steps, this is no problem at all.

Be sure to have the file permissions correct: when you install motion via ssh while logged in as user "pi", you need to make sure to give the user "motion" the permissions to run motion as a service after reboot:

sudo chmod 664 /etc/motion.conf

sudo chmod 755 /usr/bin/motion

sudo touch /tmp/motion.log

sudo chmod 775 /tmp/motion.log

We've made some changes to the motion.conf file to fit our needs. Our current motion.conf file can be downloaded here: raspberry_surveillance_cam_scavix.zip.

Just download, unzip and save as /etc/motion.conf if you would like to use the exact config options we describe below.

Some of the main changes in our motion.conf are:

Make sure that motion is always running as a daemon in the background:

daemon on

We want to store the log-file in /tmp instead (otherwise the autostart user won't be able to access it in the /home/pi/ folder):

logfile /tmp/motion.log

As we want to use a high quality surveillance video, we've set the resolution to 1280x720:

width 1280

height 720

The max. frame rate you can expect motion to cope with is about 2 pictures per second:

framerate 2

This is a very handy feature of the motion software: record some (2 in our configuration) frames before and after the motion in the image was detected:

pre_capture 2

post_capture 2

We don't want endless movies. Instead, we want the motion videos cut into slices of at most 10 minutes. This config option was renamed from max_mpeg_time to max_movie_time in later versions of motion. If you use the motion-mmal build, max_mpeg_time will work. If you get an error 'Unknown config option "max_mpeg_time"', either change this one to max_movie_time or make sure you really are using the motion-mmal build as shown above.

max_mpeg_time 600

As some media players like VLC are unable to play the recorded movies, we've changed the codec to msmpeg4. Then, the movies play correctly in all players:

ffmpeg_video_codec msmpeg4
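If you move between the motion-mmal build and a newer motion, the option rename mentioned above can be applied to an existing config file rather than edited by hand. A minimal sketch, assuming motion.conf's usual layout of one 'option value' pair per line, with lines starting with '#' or ';' treated as comments; `parse_motion_conf` and `rename_option` are hypothetical helpers, not part of motion.

```python
from typing import Dict


def parse_motion_conf(text: str) -> Dict[str, str]:
    """Return {option: value} for the active (non-comment) lines of a motion.conf-style file."""
    options: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line[0] in "#;":
            continue
        parts = line.split(None, 1)
        options[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return options


def rename_option(text: str, old: str, new: str) -> str:
    """Rename an option (e.g. max_mpeg_time -> max_movie_time), leaving comment lines alone."""
    out = []
    for line in text.splitlines(keepends=True):
        parts = line.split(None, 1)
        if parts and parts[0] == old:
            line = line.replace(old, new, 1)
        out.append(line)
    return "".join(out)
```

For example, read /etc/motion.conf, pass the text through `rename_option(text, "max_mpeg_time", "max_movie_time")`, and write the result back (as root).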

Enable access to the live stream from anywhere. Otherwise only localhost (= the Raspberry device) would be allowed to access the live stream:

stream_localhost off

If you want to protect the live stream with a username and password, you should enable this:

stream_auth_method 2

stream_authentication SOMEUSERNAME:SOMEPASSWORD

All configuration parameters are explained in detail in the motion config documentation.

After your changes to the motion.conf, reboot the Raspberry:

sudo reboot

After the reboot, the red light of the camera module should be turned on, which shows that motion currently is using the camera to detect any movement.

Using a Model A with 'motion'

The main problem with the A is the very limited RAM available for temporary image storage (out of the initial 256MB, the GPU will take 128MB). With still frames at 5MB each, you will be lucky to hold 1 second at the max frame rate of 15fps.

Start with MINIBIAN. On a Linux PC, use the dcfldd command to prepare the SD card (plain dd works too):

dcfldd bs=1M if=/tmp/2013-10-13-wheezy-minibian.img of=/dev/mmcblk0

You will need a USB-Ethernet dongle, as the Model A does not have an Ethernet port (and WiFi is not supported on MINIBIAN anyway, as wpasupplicant is not installed).

The next step is to put the SD card in the Pi, boot up, ssh to the Pi and log in as user root (default password: raspberry).

First, expand the ext4 partition to the card limit (8GB or whatever). Adding some other file system (e.g. xfs) as a separate partition would also mean adding additional tools and processes to manage the partition (which take a lot of memory relative to the little available).

Issue the following commands to set the time zone and update the system:

dpkg-reconfigure tzdata (select your closest timezone)

apt-get update

dpkg-reconfigure locales (select your locale)

apt-get upgrade

apt-get autoremove

apt-get autoclean

Now, we need to enable the camera. I used this information and created a script, as raspi-config doesn't seem to work on these minimal images.

nano enable_camera.sh (paste the contents from the link)

chmod 755 enable_camera.sh

apt-get install lua5.2 (needed as it is used in the script)

./enable_camera.sh

We can now test the camera by taking an image:

raspistill -o image.jpg

The camera should light up its RED light and take a photo. If we are successful here, we will now try to get motion to work. Go ahead and reboot in the meantime.

First we start by installing the dependencies required by motion (referenced here):

apt-get install -y libjpeg62 libjpeg62-dev libavformat53 libavformat-dev libavcodec53 libavcodec-dev libavutil51 libavutil-dev libc6-dev zlib1g-dev libmysqlclient18 libmysqlclient-dev libpq5 libpq-dev

Since motion does not yet support the Pi, we need to keep following the above referenced location and get the special build of motion from the Dropbox link, un-compress it and put it in the executable path:

wget https://www.dropbox.com/s/xdfcxm5hu71s97d/motion-mmal.tar.gz

tar zxvf motion-mmal.tar.gz

mv motion /usr/bin/.

You can probably use the settings in the file from Dropbox above, however I used the config file and init script from this location.

cp motion.conf /etc/.

cp motion /etc/init.d/.

chmod 755 /etc/init.d/motion

The above conf file has a video location (search for CAM1 to find it) that you will need to modify to determine where the images and videos captured by the camera are stored.

The above motion init file has a 'sleep 30' in its start option, to allow time for the CAM1 location to be mounted. You can comment this out if you are storing images on the card or somewhere else that does not require this delay.

The init script will do a 'chuid' to run this as user motion, therefore we need to create that user. I created it as a system user with no shell and added it to the video group so it is able to access the camera module.

useradd motion -rs /bin/false

usermod -a -G video motion

You should now be able to start and stop motion:

/etc/init.d/motion start

/etc/init.d/motion stop

To set it up so that it auto-starts at boot up, execute this command:

update-rc.d motion defaults

Next up, I will attempt to reduce unnecessary usage of RAM on this Model A to give motion as much as possible following this guide. Currently I get about 20MB free when motion is running, which by subtraction means motion uses 40MB to run. Remember also that the GPU takes half (128MB) of the total RAM when you need to use the camera. I will do the following changes from the referenced guide above to get back about 13.5MB RAM:

Replacing OpenSSH with Dropbear | Save: +10MB RAM

Remove the extra tty / getty’s | Save: +3.5 MB RAM

Disable IPv6

Replace Deadline Scheduler with NOOP Scheduler
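To measure the 'free RAM with motion running' figure before and after these changes, the standard `free -m` command is enough; the Python sketch below reads the same information from /proc/meminfo, so it could, for example, be logged alongside motion. It prefers MemAvailable but falls back to MemFree, because older kernels (such as the 3.6 kernel shown below) do not report MemAvailable. Function names are hypothetical.

```python
import os
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field name: value in kB}."""
    info: Dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            info[name.strip()] = int(fields[0])
    return info


def free_mb(info: Dict[str, int]) -> float:
    """Free RAM in MB: MemAvailable if the kernel reports it, otherwise MemFree."""
    kb = info.get("MemAvailable", info.get("MemFree", 0))
    return kb / 1024


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        print("%.1f MB free" % free_mb(parse_meminfo(f.read())))
```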

BTW, you can check the current version of the firmware etc using the following two commands. Not sure how you would compare if you have the latest or not. If you need to update it, you can use the instructions from the previous referenced guide.

/opt/vc/bin/vcgencmd version

uname -a

This gives me the following:

Sep 1 2013 23:27:46

Copyright (c) 2012 Broadcom

version 4f9d19896166f46a3255801bc1834561bf092732 (clean) (release)

Linux raspberrypi 3.6.11+ #538 PREEMPT Fri Aug 30 20:42:08 BST 2013 armv6l GNU/Linux

Here are the commands for the changes I made in the order I did them (explanations can be found from the referenced link):

apt-get install dropbear openssh-client

/etc/init.d/ssh stop

sed -i 's/NO_START=1/NO_START=0/g' /etc/default/dropbear

/etc/init.d/dropbear start

exit

ssh root@xxx.xxx.x.xxx

apt-get purge openssh-server

sed -i '/[2-6]:23:respawn:\/sbin\/getty 38400 tty[2-6]/s%^%#%g' /etc/inittab

sed -i '/T0:23:respawn:\/sbin\/getty -L ttyAMA0 115200 vt100/s%^%#%g' /etc/inittab

echo "net.ipv6.conf.all.disable_ipv6=1" > /etc/sysctl.d/disableipv6.conf

echo 'blacklist ipv6' >> /etc/modprobe.d/blacklist

sed -i '/::/s%^%#%g' /etc/hosts

sed -i 's/deadline/noop/g' /boot/cmdline.txt

reboot

The next step I will do is set this up to work with WiFi (with a hidden SSID). If you have a Pi with an Ethernet port or will use a USB-Ethernet dongle, then you may not require this. The Linksys USB10T works out of the box. I will, however, be using an Edimax nano WiFi adapter. My setup is from this reference.

I will also set this up to store images on my Synology NAS and try to get the Surveillance Station app on the NAS to work as described here.

(DONE – works like a charm)

Finally, it goes without saying that without the hard work of all the folks referenced here (and many more), this would have been a whole lot tougher if not impossible for me to get going.

Accessing i/o pins from shell script

To access any of the i/o pins we first have to 'export' them to the file system using the export file I mentioned above (for some reason they are not exposed by default). You can then access them from a Bash shell script, using sysfs (which is part of the Raspbian operating system). The 'export' (and 'unexport') of pins must be done as root. To change to the root user, enter:

sudo -i

To change back, enter exit.

Export creates a new folder for the exported pin, and creates files for each of its control functions (i.e. active_low, direction, edge, power, subsystem, uevent, and value). Upon creation, the control files can be read by all users (not just root), but can only be written to by user root, the files' owner. Nevertheless, once created, it is possible to allow users other than root to also write to the control files, by changing the ownership or permissions of these files. Changes to the files' ownership or permissions must initially be done as root, as their owner and group are set to root upon creation. Typically you might change the owner to be the (non root) user controlling the GPIO, or you might add write permission and change the group ownership to a group of which the user controlling the GPIO is a member. By such means, using only packages provided in the recommended Raspbian distribution, it is possible for Python CGI scripts, which are typically run as user nobody, to be used for control of the GPIO over the internet from a browser at a remote location.

#!/bin/sh
# GPIO numbers should be from this list
# 0, 1, 4, 7, 8, 9, 10, 11, 14, 15, 17, 18, 21, 22, 23, 24, 25
# Note that the GPIO numbers that you program here refer to the pins
# of the BCM2835 and *not* the numbers on the pin header.
# So, if you want to activate GPIO7 on the header you should be
# using GPIO4 in this script. Likewise if you want to activate GPIO0
# on the header you should be using GPIO17 here.

# Set up GPIO 4 and set to output
echo "4" > /sys/class/gpio/export
echo "out" > /sys/class/gpio/gpio4/direction

# Set up GPIO 7 and set to input
echo "7" > /sys/class/gpio/export
echo "in" > /sys/class/gpio/gpio7/direction

# Write output
echo "1" > /sys/class/gpio/gpio4/value

# Read from input
cat /sys/class/gpio/gpio7/value

# Clean up
echo "4" > /sys/class/gpio/unexport
echo "7" > /sys/class/gpio/unexport

So if we want to be able to access pin 4, we would type echo 4 > /sys/class/gpio/export (all these commands must be run as root). This would cause a new directory entry, /sys/class/gpio/gpio4, to appear in the file system. There are several items in the gpio4 directory, but of immediate interest are direction and value.

To specify that we want to use the pin as an output, we can do echo out > /sys/class/gpio/gpio4/direction. Then we can set the pin high or low by echoing a 1 or 0, respectively, to /sys/class/gpio/gpio4/value.

To specify that we want to use the pin as an input, we can do echo in > /sys/class/gpio/gpio4/direction. Then we can sense if the pin is high (1) or low (0) by using cat /sys/class/gpio/gpio4/value.

To loop for ever whilst sensing e.g. i/o line 4:

#!/bin/sh
while true; do
    VAL=$(cat /sys/class/gpio/gpio4/value)
    if [ "$VAL" = "1" ]; then
        echo "Hi found"
    else
        echo "Lo found"
    fi
    # wait 2s before checking again
    sleep 2
done

Switch wiring:

3.3v
 |
100k
 |
switch -- 3k3 -- i/o pin
 |
Gnd

The following will turn i/o pin 4 on/off at max speed (about 3.4kHz), generating a square wave. Faster switching (up to 22MHz) can be achieved in C code (using native libraries).

#!/bin/sh
echo "4" > /sys/class/gpio/export
echo "out" > /sys/class/gpio/gpio4/direction
while true
do
    echo 1 > /sys/class/gpio/gpio4/value
    echo 0 > /sys/class/gpio/gpio4/value
done

Shell script - take 2

You need the wiringPi library from https://projects.drogon.net/raspberry-pi/wiringpi/download-and-install/.
Once installed, there is a new command, gpio, which can be used as a non-root user to control the GPIO pins. The man page (man gpio) has full details, but briefly:

gpio -g mode 17 out
gpio -g mode 18 pwm
gpio -g write 17 1
gpio -g pwm 18 512

The -g flag tells the gpio program to use the BCM GPIO pin numbering scheme (otherwise it will use the wiringPi numbering scheme by default).

The gpio command can also control the internal pull-up and pull-down resistors:

gpio -g mode 17 up

This sets the pull-up resistor. However, any change of mode, even setting a pin that's already an input to an input, will remove the pull-up/pull-down resistors, so they may need to be reset.

Additionally, it can export/un-export the GPIO devices for use by other non-root programs, e.g. Python scripts. (Although you may need to drop the calls to GPIO.setup() in the Python scripts, and do the setup separately in a little shell script, or call the gpio program from inside Python.)

gpio export 17 out
gpio export 18 in

These export GPIO-17 and set it to output, and export GPIO-18 and set it to input. And when done:

gpio unexport 17

The export/unexport commands always use the BCM GPIO pin numbers, whether or not the -g flag is present. If you want to use the internal pull-ups/pull-downs with the /sys/class/gpio mechanism, then you can set them after exporting the pins. So:

gpio -g export 4 in
gpio -g mode 4 up

You can then use GPIO-4 as an input in your Python, Shell, Java, etc. programs without the use of an external resistor to pull the pin high. (If that's what you were after, for example, a simple push button switch taking the pin to ground.)

A fully working example of a shell script using the GPIO pins can be found at http://project-downloads.drogon.net/files/gpioExamples/tuxx.sh.
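The same sysfs steps can also be driven from Python using only plain file reads and writes. This is a minimal sketch, assuming it runs as root (or with suitably changed file permissions, as described above) and uses BCM pin numbers. The function names are hypothetical, and the root directory is a parameter only so the code can be tried against an ordinary directory off the Pi. Note that on recent kernels the sysfs GPIO interface is deprecated in favour of the GPIO character device (libgpiod).

```python
import time
from pathlib import Path
from typing import Optional

GPIO_ROOT = Path("/sys/class/gpio")  # the sysfs interface used in the shell scripts above


def export_pin(pin: int, direction: str, root: Path = GPIO_ROOT) -> None:
    """Export a BCM GPIO pin (if not already exported) and set its direction ('in' or 'out')."""
    pin_dir = root / f"gpio{pin}"
    if not pin_dir.exists():
        (root / "export").write_text(str(pin))
    (pin_dir / "direction").write_text(direction)


def write_pin(pin: int, value: bool, root: Path = GPIO_ROOT) -> None:
    """Drive an output pin high (1) or low (0)."""
    (root / f"gpio{pin}" / "value").write_text("1" if value else "0")


def read_pin(pin: int, root: Path = GPIO_ROOT) -> int:
    """Return 1 if the input pin is high, 0 if it is low."""
    return int((root / f"gpio{pin}" / "value").read_text().strip())


def watch_pin(pin: int, interval: float = 2.0, count: Optional[int] = None,
              root: Path = GPIO_ROOT) -> None:
    """Python version of the 'Hi found / Lo found' polling loop (count=None loops for ever)."""
    n = 0
    while count is None or n < count:
        print("Hi found" if read_pin(pin, root) == 1 else "Lo found")
        n += 1
        time.sleep(interval)
```

On the Pi itself, `export_pin(4, "in")` followed by `watch_pin(4)` behaves like the shell polling loop above.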

Remote viewing

One approach is to take still images, add an overlay and 'tweet' them! For details, see here. However, don't expect any sort of 'real time' feed :-)

Viewing a video stream

You can, of course, go for HD CCTV (or HD-SDI as they call it), which is full HD resolution, for an even higher price.